Evaluating the Efficacy of Language Models: A Comprehensive Examination of Testing Methods

Related Articles: Evaluating the Efficacy of Language Models: A Comprehensive Examination of Testing Methods

Introduction

With great pleasure, we will explore the intriguing topic related to Evaluating the Efficacy of Language Models: A Comprehensive Examination of Testing Methods. Let’s weave interesting information and offer fresh perspectives to the readers.

Table of Content

Evaluating the Efficacy of Language Models: A Comprehensive Examination of Testing Methods

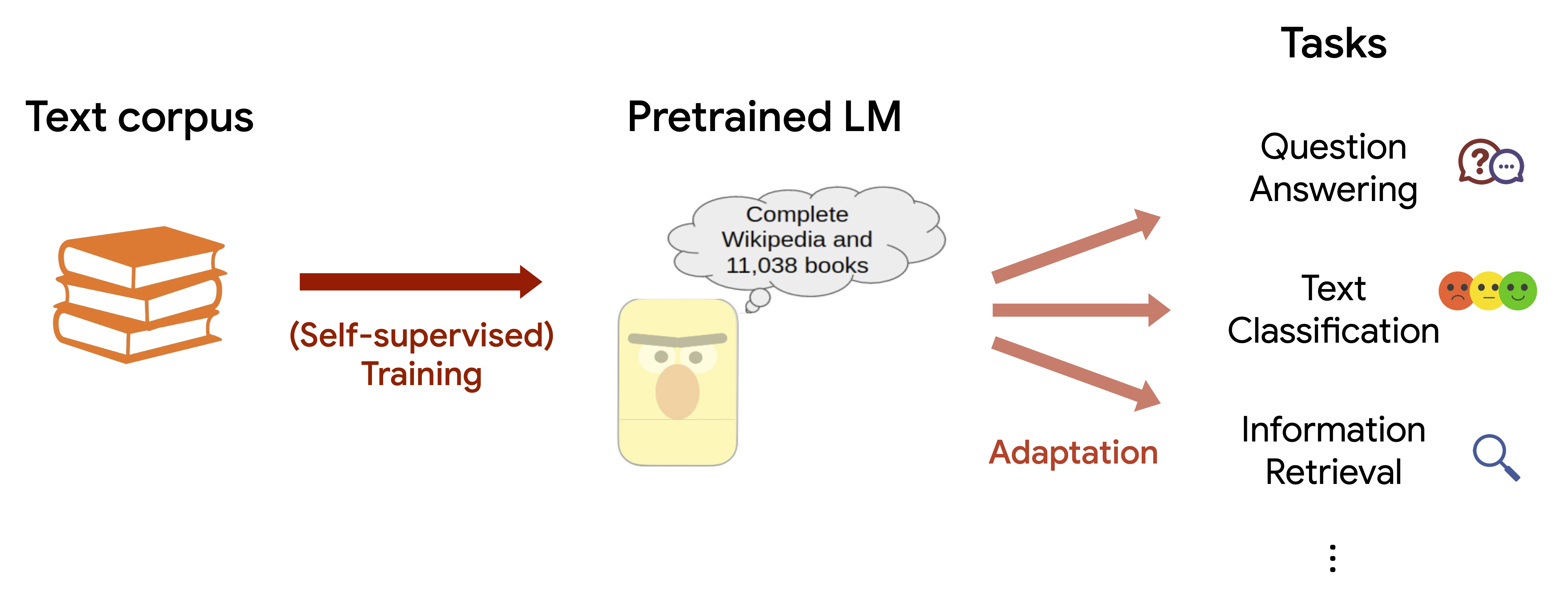

The rapid advancement of language models (LMs) has ushered in a new era of artificial intelligence (AI) capabilities. These models, trained on vast datasets of text and code, exhibit remarkable abilities in tasks such as text generation, translation, summarization, and code completion. However, assessing the true efficacy of these models remains a critical challenge. This article delves into the multifaceted process of evaluating LMs, exploring various testing methodologies and their strengths and limitations.

The Importance of Rigorous Evaluation:

Evaluating LMs is not merely a matter of academic curiosity; it is essential for several reasons:

- Understanding Model Performance: Testing allows for quantifying the strengths and weaknesses of an LM, providing insights into its capabilities and limitations. This information is crucial for developers to identify areas for improvement and optimize model performance.

- Identifying Bias and Fairness: LMs are trained on data that reflects societal biases. Testing helps uncover these biases and ensure that models are fair and equitable in their outputs.

- Ensuring Safety and Reliability: As LMs are increasingly integrated into various applications, it is crucial to ensure their safety and reliability. Testing helps identify potential risks and vulnerabilities, enabling developers to address them proactively.

- Guiding Future Research: Evaluation results provide valuable data for researchers to understand the limitations of current models and guide the development of more robust and reliable LMs in the future.

Testing Methodologies:

A diverse range of testing methodologies are employed to evaluate LMs, each with its unique strengths and limitations. These methods can be broadly categorized as follows:

1. Human Evaluation:

Human evaluation involves assessing the quality of LM outputs through human judgment. This method is particularly valuable for tasks where subjective evaluation is essential, such as text generation, translation, and summarization.

Types of Human Evaluation:

- Direct Comparison: Human evaluators are presented with pairs of outputs (one from the LM and one from a human-generated baseline) and asked to choose the better one.

- Rating Scales: Evaluators rate LM outputs on various criteria, such as fluency, coherence, accuracy, and relevance, using scales like Likert scales or numerical ratings.

- Open-Ended Feedback: Evaluators provide free-form textual feedback on the strengths and weaknesses of the LM outputs.

Advantages:

- Subjective Assessment: Captures human perception of quality, which is often difficult to quantify objectively.

- Real-World Relevance: Reflects how humans actually experience and interact with LM outputs.

Disadvantages:

- Subjectivity: Evaluators’ judgments can be influenced by personal biases and interpretations.

- Cost and Time: Human evaluation is labor-intensive and can be time-consuming.

2. Automatic Evaluation:

Automatic evaluation uses computer-based metrics to assess LM outputs. This approach is often faster and more scalable than human evaluation, making it suitable for large-scale testing.

Common Automatic Metrics:

- BLEU (Bilingual Evaluation Understudy): Measures the overlap between LM outputs and reference translations.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): Evaluates the recall of key phrases and sentences in summarization tasks.

- Perplexity: Measures the model’s ability to predict the next word in a sequence.

Advantages:

- Objectivity: Provides quantitative measures that are less prone to subjective biases.

- Efficiency: Automatic evaluation is faster and more scalable than human evaluation.

Disadvantages:

- Correlation with Human Judgment: Automatic metrics may not always correlate well with human perception of quality.

- Limited Scope: May not capture all aspects of quality that are relevant to humans.

3. Benchmarking:

Benchmarking involves comparing LM performance on standardized datasets and tasks. This approach allows for objective comparisons across different models and provides a standardized way to track progress in the field.

Popular Benchmarks:

- GLUE (General Language Understanding Evaluation): A benchmark for evaluating natural language understanding tasks.

- SuperGLUE (Super General Language Understanding Evaluation): A more challenging benchmark that includes tasks requiring reasoning and common sense.

- SQuAD (Stanford Question Answering Dataset): A benchmark for evaluating question answering systems.

Advantages:

- Standardization: Provides a common ground for comparing different models.

- Progress Tracking: Allows for monitoring progress in the field over time.

Disadvantages:

- Limited Scope: Benchmarks may not capture the full range of LM capabilities.

- Overfitting: Models may become overly optimized for specific benchmarks, leading to poor performance on other tasks.

4. Safety and Bias Testing:

Testing for safety and bias involves evaluating the potential risks and biases associated with LM outputs. This is particularly important for applications where LMs interact with humans or make decisions that could have real-world consequences.

Methods for Safety and Bias Testing:

- Adversarial Testing: Feeding the LM with carefully crafted inputs designed to elicit undesirable outputs.

- Bias Detection Tools: Using specialized tools to identify and quantify biases in LM outputs.

- Human-in-the-Loop Evaluation: Involving human evaluators to assess the potential impact of LM outputs on real-world situations.

Advantages:

- Proactive Risk Mitigation: Helps identify and address potential safety and bias issues before they cause harm.

- Ethical Considerations: Ensures that LMs are developed and deployed responsibly.

Disadvantages:

- Complexity: Testing for safety and bias can be challenging and resource-intensive.

- Ongoing Effort: Safety and bias testing needs to be an ongoing process, as models evolve and new risks emerge.

FAQs about Testing Language Models:

Q: What are the most important factors to consider when evaluating LMs?

A: The most important factors depend on the specific application of the LM. However, some common considerations include accuracy, fluency, coherence, relevance, safety, and bias.

Q: How can I ensure that my LM evaluations are reliable and trustworthy?

A: Employ a variety of testing methodologies, including human evaluation and automatic metrics. Ensure that your test datasets are representative of the intended use case and that your evaluation process is transparent and reproducible.

Q: How can I address biases in my LM outputs?

A: Use de-biasing techniques during training, such as data augmentation or adversarial training. Regularly test for biases and address them through model retraining or other interventions.

Q: What are the ethical implications of evaluating LMs?

A: It is essential to consider the potential impact of LM outputs on individuals and society as a whole. Ensure that your evaluation process is fair and equitable and that your models are not used in ways that could cause harm.

Tips for Effective LM Testing:

- Define clear evaluation goals and metrics: Clearly articulate what you are trying to measure and how you will quantify it.

- Use a variety of testing methodologies: Combine human evaluation with automatic metrics to obtain a comprehensive understanding of model performance.

- Ensure that your test datasets are representative: Use datasets that reflect the intended use case of the LM.

- Monitor model performance over time: Track changes in model performance as you make updates or modifications.

- Involve diverse perspectives: Solicit feedback from a range of stakeholders, including domain experts, users, and ethicists.

Conclusion:

Testing language models is a multifaceted and essential process that ensures the efficacy, safety, and reliability of these powerful technologies. By employing a diverse range of methodologies, including human evaluation, automatic metrics, benchmarking, and safety and bias testing, we can gain a comprehensive understanding of LM performance and guide the development of more robust and responsible AI systems. As LMs continue to evolve and integrate into various aspects of our lives, rigorous testing remains a critical pillar for ensuring that these technologies are used ethically and for the benefit of humanity.

Closure

Thus, we hope this article has provided valuable insights into Evaluating the Efficacy of Language Models: A Comprehensive Examination of Testing Methods. We hope you find this article informative and beneficial. See you in our next article!