Unveiling Hidden Structures in Data: A Comprehensive Guide to UMAP in TensorFlow

The growing volume and complexity of data call for tools that make high-dimensional datasets explorable. Linear dimensionality reduction techniques such as PCA are valuable, but they often fail to preserve the non-linear relationships within high-dimensional data. UMAP is a non-linear algorithm designed to reveal that underlying structure, and it remains practical on large datasets. This guide covers how UMAP works, how to use it alongside the TensorFlow framework, and where it can help across various domains.

Understanding UMAP: A Journey Through the Dimensions

UMAP, short for Uniform Manifold Approximation and Projection, is a non-linear dimensionality reduction technique designed to preserve local structure while retaining much of the global structure of the data. Unlike linear methods, it can handle data with complex, non-linear relationships, which makes it particularly suitable for applications in fields like:

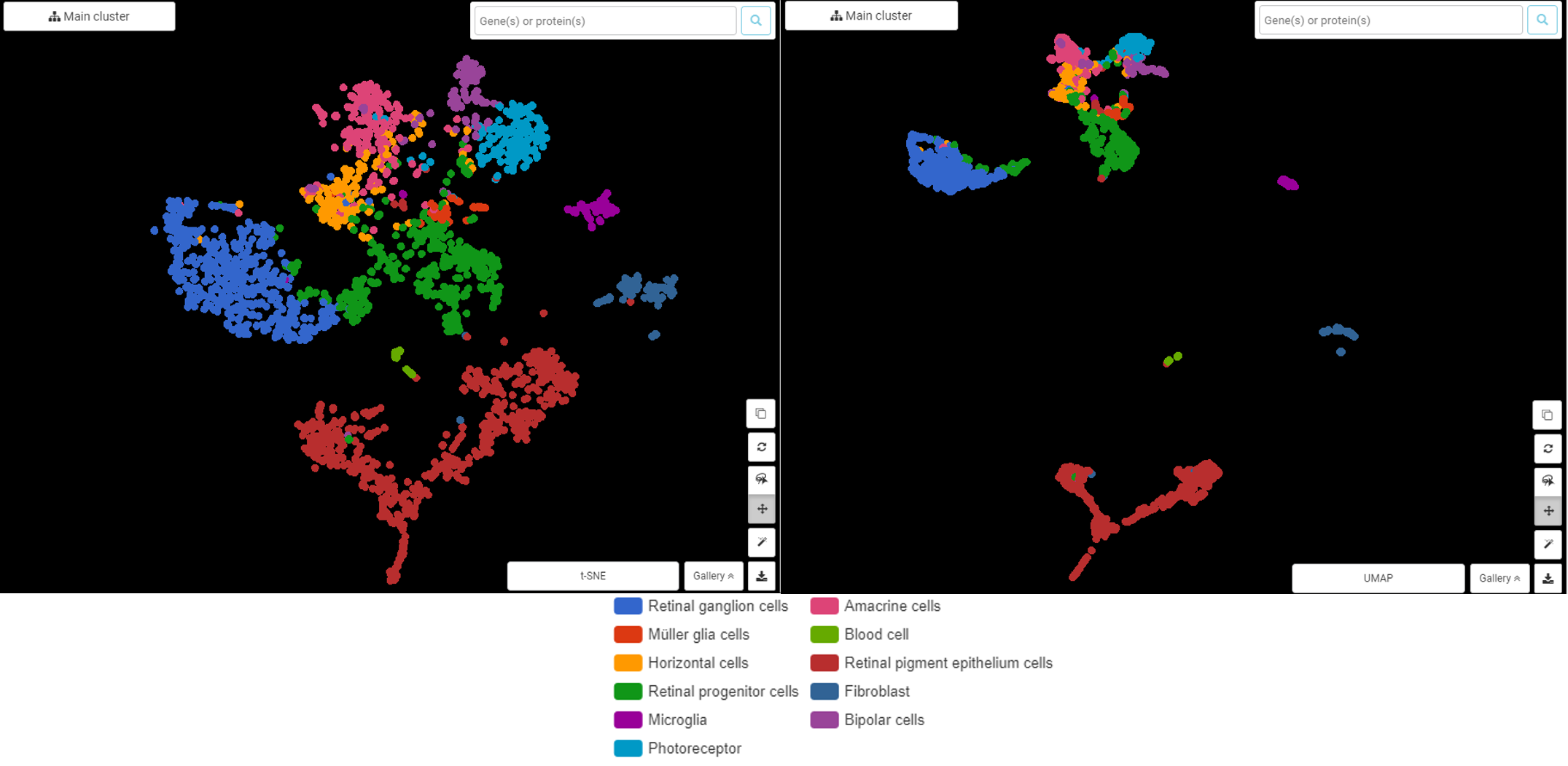

- Machine Learning: Visualizing high-dimensional data to support model interpretation and feature selection.

- Bioinformatics: Analyzing large-scale genomic data, understanding gene expression patterns, and identifying disease biomarkers.

- Image Processing: Extracting meaningful features from images for tasks like image classification and retrieval.

- Natural Language Processing: Representing text data in lower dimensions for tasks like topic modeling and sentiment analysis.

The Essence of UMAP: A Journey of Topological Preservation

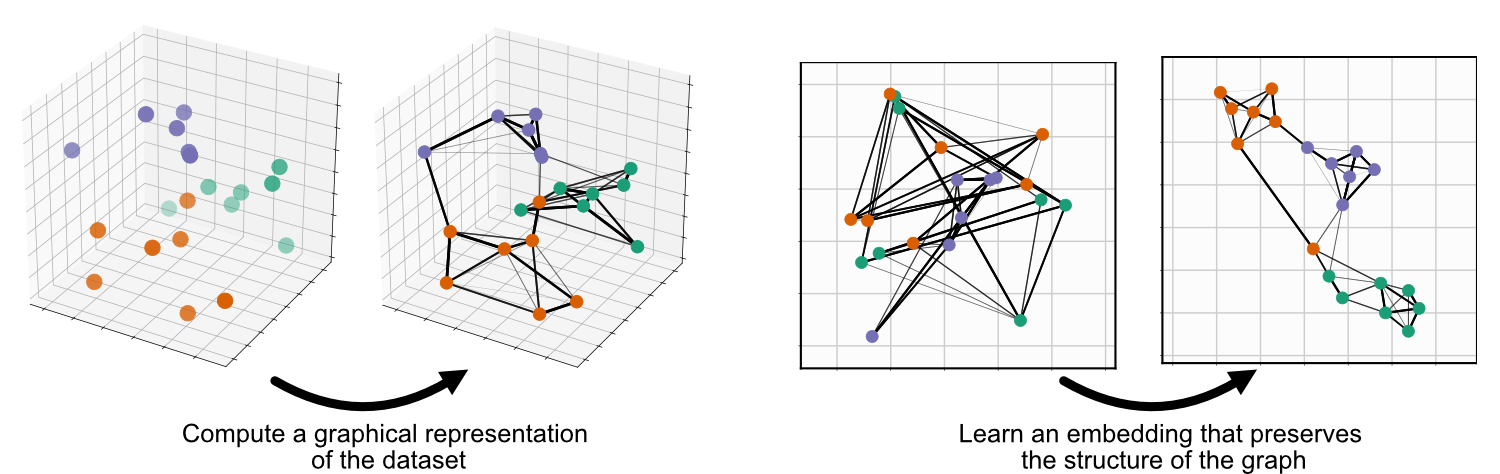

The core of UMAP lies in its ability to approximate the underlying manifold of the data, a mathematical construct that captures the inherent relationships between data points. This approximation is achieved through a two-step process:

- Constructing a Neighborhood Graph: UMAP begins by building a graph that represents the local relationships between data points. This graph captures the proximity of points in the high-dimensional space, reflecting the local structure of the data.

- Projecting the Graph: UMAP then projects this graph onto a lower-dimensional space, preserving the topological structure of the original data. This projection aims to maintain the relative distances and connections between points, allowing for meaningful visualization and analysis in a reduced dimension.
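The first step can be illustrated with a minimal NumPy sketch. It is a simplification, not UMAP's actual implementation: it uses a brute-force Euclidean search, whereas UMAP libraries typically use an approximate search and then turn the distances into weighted graph edges. The function name `knn_graph` is chosen here for illustration.

```python
import numpy as np

def knn_graph(X, n_neighbors):
    """Return (indices, distances) of each point's nearest neighbors.

    Brute-force Euclidean search, for illustration only.
    """
    # Pairwise Euclidean distances between all rows of X
    diffs = X[:, None, :] - X[None, :, :]
    dists = np.sqrt(np.sum(diffs ** 2, axis=-1))
    # A point should not be listed as its own neighbor
    np.fill_diagonal(dists, np.inf)
    # Column order of each row, sorted from nearest to farthest
    indices = np.argsort(dists, axis=1)[:, :n_neighbors]
    distances = np.take_along_axis(dists, indices, axis=1)
    return indices, distances

# Three points on a line at positions 0, 1 and 3
points = np.array([[0.0], [1.0], [3.0]])
indices, distances = knn_graph(points, n_neighbors=1)
```

Each row of `indices` lists the neighbors of one point, which is the local structure that the second step tries to keep intact in the lower-dimensional layout.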

UMAP in TensorFlow: A Synergistic Partnership

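TensorFlow, a widely adopted open-source machine learning framework, pairs well with UMAP. The core algorithm in the umap-learn package runs on NumPy and Numba rather than on TensorFlow itself. The TensorFlow connection comes from the package's ParametricUMAP class, which trains a Keras network to produce the embedding, and from passing UMAP embeddings into or out of TensorFlow models.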

Key Advantages of UMAP in TensorFlow:

- Scalability: UMAP relies on approximate nearest-neighbor search, which keeps it practical on large datasets, and the TensorFlow-based ParametricUMAP class in umap-learn can be trained in mini-batches.

- Flexibility: The algorithm's parameters can be customized to suit specific datasets and tasks.

- Integration: UMAP embeddings can feed into other machine learning models and pipelines built in TensorFlow, supporting an end-to-end analysis workflow.

Implementing UMAP in TensorFlow: A Practical Guide

Implementing UMAP in TensorFlow is straightforward, thanks to the availability of dedicated libraries and resources. The following steps provide a general outline for incorporating UMAP into TensorFlow workflows:

- Data Preparation: Load and prepare your data for analysis. This may involve scaling, normalization, or other preprocessing steps depending on the nature of your data.

- UMAP Library Installation: Ensure the necessary UMAP library is installed within your TensorFlow environment. This can be achieved using the `pip install umap-learn` command.

- Model Initialization: Instantiate a UMAP object, specifying parameters like the number of desired dimensions, the neighborhood size, and other settings.

- Model Training: Train the UMAP model on your data. This involves fitting the model to the data, allowing it to learn the underlying structure.

- Dimensionality Reduction: Apply the trained UMAP model to your data to reduce its dimensionality. This yields a lower-dimensional representation of the data while preserving its key topological features.

- Visualization and Analysis: Visualize and analyze the reduced-dimensional data using appropriate techniques like scatter plots, histograms, or other visualization tools.

FAQs: Addressing Common Queries

1. What are the key parameters to consider when using UMAP in TensorFlow?

- n_neighbors: Defines the number of nearest neighbors to consider when constructing the neighborhood graph. Higher values capture broader relationships, while lower values focus on local structures.

- n_components: Specifies the number of dimensions to reduce the data to. This choice depends on the desired level of dimensionality reduction and the specific application.

- min_dist: Sets the minimum distance allowed between points in the lower-dimensional space. Lower values produce tighter, more densely packed clusters, while higher values spread points out and emphasize broader structure.

- metric: Determines the distance metric used to measure how far apart data points are. Common choices include Euclidean distance, Manhattan distance, and cosine distance.
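To make the metric choice concrete, here is a small NumPy sketch of the three distances mentioned above. The helper names are chosen for illustration and are not part of any UMAP library, which computes these internally once a metric name is selected.

```python
import numpy as np

def euclidean(a, b):
    # Straight-line distance
    return float(np.sqrt(np.sum((a - b) ** 2)))

def manhattan(a, b):
    # Sum of absolute coordinate differences
    return float(np.sum(np.abs(a - b)))

def cosine_distance(a, b):
    # One minus the cosine of the angle between the vectors
    return float(1.0 - np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

a = np.array([1.0, 0.0])
b = np.array([0.0, 1.0])
c = np.array([2.0, 0.0])

d_euclid_ab = euclidean(a, b)
d_manhattan_ab = manhattan(a, b)
d_cosine_ab = cosine_distance(a, b)
d_cosine_ac = cosine_distance(a, c)  # same direction, different length
```

Cosine distance ignores vector length, so `a` and `c` are at distance zero under it even though they are one unit apart in Euclidean terms. That property is often useful for text or other data where direction matters more than magnitude.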

2. How can I optimize UMAP performance for my specific dataset?

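Experiment with different parameter settings to find a good configuration for your data. Techniques like grid search or random search let you explore a range of parameter values systematically. Because UMAP is unsupervised, you need a score to compare candidates, such as a neighborhood-preservation measure or the performance of a downstream task on the embedding.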
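A grid search can be sketched in a few lines of plain Python. The `evaluate` callback below is a hypothetical stand-in: in practice it would fit UMAP with the given parameters and return a quality score, while here it is a toy function so the example runs on its own.

```python
from itertools import product

def grid_search(param_grid, evaluate):
    """Try every parameter combination and return the best-scoring one."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)  # higher is better
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_score(params):
    # Hypothetical scoring function, peaked at n_neighbors=15 and min_dist=0.1
    return -abs(params["n_neighbors"] - 15) - abs(params["min_dist"] - 0.1)

grid = {"n_neighbors": [5, 15, 50], "min_dist": [0.0, 0.1, 0.5]}
best_params, best_score = grid_search(grid, toy_score)
```

One possible real scoring function is `sklearn.manifold.trustworthiness`, which measures how well local neighborhoods survive the reduction. Any score that reflects your actual goal would work in its place.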

3. How can I interpret the results of UMAP in TensorFlow?

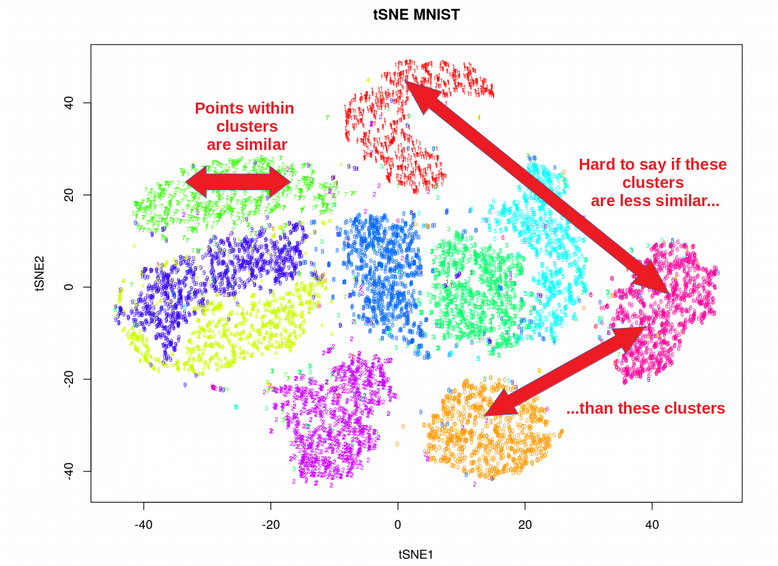

Visualize the reduced-dimensional data using scatter plots or other visualization tools. Look for clusters, outliers, and other patterns that reveal the underlying structure of the data. Additionally, consider using techniques like density estimation to identify regions of high data concentration.
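As a rough illustration of the density idea, the sketch below bins a two-dimensional embedding into a grid and reports the most populated cell. The embedding here is synthetic stand-in data, and a histogram is only one simple form of density estimation. The function name `densest_cell` is chosen for illustration.

```python
import numpy as np

def densest_cell(embedding, bins=20):
    """Return the 2-D histogram counts and the center of the fullest cell."""
    counts, x_edges, y_edges = np.histogram2d(
        embedding[:, 0], embedding[:, 1], bins=bins
    )
    i, j = np.unravel_index(np.argmax(counts), counts.shape)
    center = (
        0.5 * (x_edges[i] + x_edges[i + 1]),
        0.5 * (y_edges[j] + y_edges[j + 1]),
    )
    return counts, center

# Stand-in embedding: a tight cluster near (5, 5) plus scattered background points
rng = np.random.default_rng(0)
cluster = rng.normal(loc=5.0, scale=0.1, size=(500, 2))
background = rng.uniform(0.0, 10.0, size=(100, 2))
embedding = np.vstack([cluster, background])

counts, center = densest_cell(embedding)
```

The returned `center` points to the region where the data concentrates, which can then be inspected more closely in the scatter plot.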

4. Can I use UMAP in TensorFlow for time series data?

Yes, UMAP can be applied to time series data by converting it into a suitable representation, such as a time-lagged embedding. This allows UMAP to capture the temporal dependencies within the data.
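A time-lagged embedding turns a one-dimensional series into rows of lagged values, which can then be passed to UMAP like any other feature matrix. The following NumPy sketch shows one way to build it. The function name and the choice of `dim` and `lag` are illustrative assumptions.

```python
import numpy as np

def time_delay_embedding(series, dim, lag=1):
    """Stack lagged copies of a 1-D series into a 2-D array.

    Row t is [x[t], x[t + lag], ..., x[t + (dim - 1) * lag]].
    """
    series = np.asarray(series)
    n_vectors = len(series) - (dim - 1) * lag
    if n_vectors <= 0:
        raise ValueError("series is too short for this dim and lag")
    columns = [series[i * lag : i * lag + n_vectors] for i in range(dim)]
    return np.stack(columns, axis=1)

windows = time_delay_embedding(np.arange(6), dim=3, lag=2)
# windows is [[0, 2, 4], [1, 3, 5]]
```

Each row captures a short stretch of the series, so points that end up close together in the UMAP embedding correspond to similar local temporal patterns.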

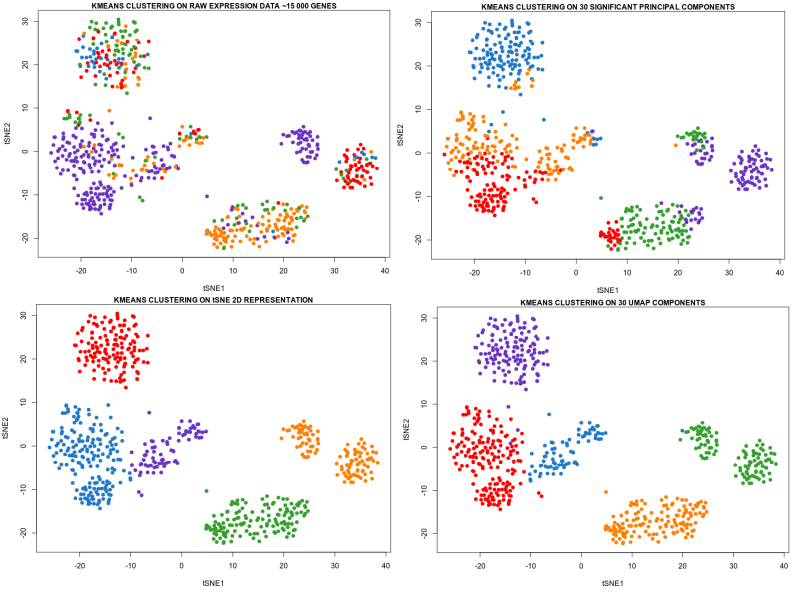

5. How does UMAP compare to other dimensionality reduction techniques?

Compared with Principal Component Analysis (PCA), UMAP can capture non-linear relationships, whereas PCA is restricted to linear projections. Compared with t-SNE, UMAP generally preserves more of the global structure alongside the local structure and tends to scale better to large datasets.

Tips for Effective UMAP Implementation

- Data Preprocessing: Ensure your data is properly preprocessed to minimize noise and enhance the performance of UMAP.

- Parameter Tuning: Experiment with different parameter settings to find the optimal configuration for your specific dataset and application.

- Visualization: Utilize effective visualization techniques to gain insights from the reduced-dimensional data.

- Comparison: Compare the results of UMAP with other dimensionality reduction techniques to assess its effectiveness for your task.
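For the comparison tip, a linear baseline is easy to produce. The sketch below implements PCA with NumPy's singular value decomposition so that its output can be plotted next to a UMAP embedding of the same data. The function name `pca_project` is chosen for illustration, and the data is synthetic.

```python
import numpy as np

def pca_project(X, n_components=2):
    """Project X onto its first principal components."""
    X_centered = X - X.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance
    _, _, vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ vt[:n_components].T

# Stand-in data: points lying along a single direction in 3-D space
rng = np.random.default_rng(0)
t = rng.normal(size=50)
direction = np.array([1.0, 2.0, 2.0]) / 3.0  # unit-length vector
X = t[:, None] * direction[None, :]

projection = pca_project(X, n_components=2)
```

Because this data is perfectly linear, the first component carries all of the variance and the second is numerically zero. If PCA already separates the groups of interest in your data, a non-linear method may add little. If it does not, the UMAP embedding is worth a closer look.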

Conclusion: Empowering Data Exploration and Analysis

UMAP, used alongside TensorFlow, offers a practical approach to dimensionality reduction. Its ability to preserve the underlying structure of high-dimensional data helps analysts uncover hidden patterns, interpret models, and gain deeper insights from complex datasets. Researchers and practitioners across various domains can apply it to explore their data more effectively and to support better-informed decisions.
